Tracking Wealth Through the AI Lens

This week, if you happen to be in Central Texas and the skies are clear, you have two opportunities to witness a minor miracle of engineering. On Tuesday evening, for three minutes starting at 8:43 PM, a brilliant, fast-moving point of light will arc across the southwestern sky. On Wednesday, the show is even better: a full six-minute pass directly overhead, beginning at 7:55 PM. This silent, steady star is, of course, the International Space Station.

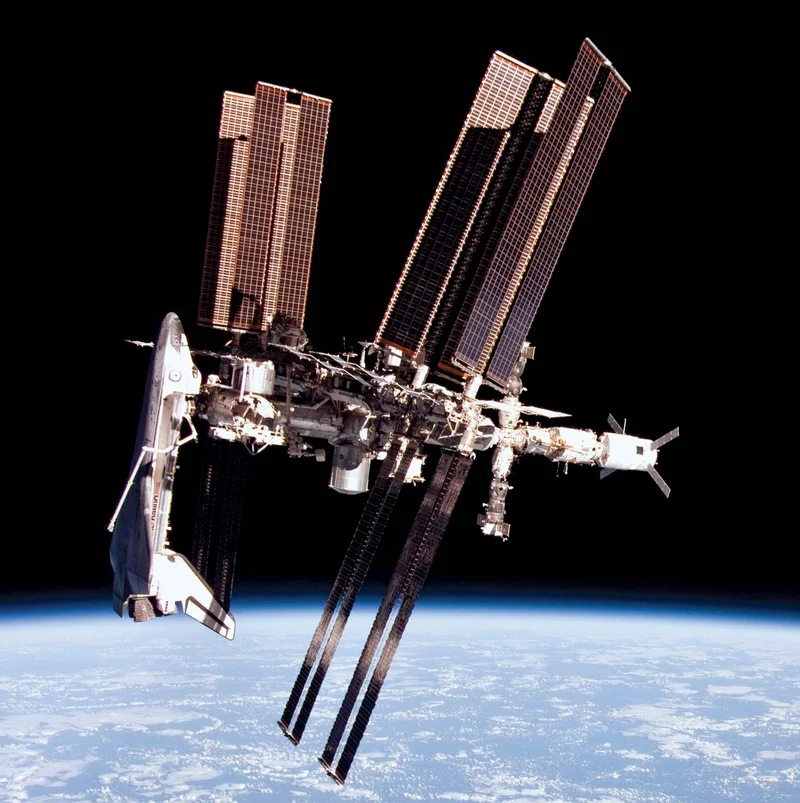

From the ground, the ISS is a clean, abstract concept. It’s a testament to human ingenuity, a symbol of peaceful cooperation moving at 17,500 miles per hour. It reflects the sun’s light down to us, a serene beacon of progress. This is the story we are meant to see.

The official narrative, meticulously curated by NASA, reinforces this image. The station, we are told, is our vital platform for venturing into deep space. After 25 years of continuous human presence, it serves as the essential testbed for the Artemis missions to the Moon and, eventually, Mars. The language is aspirational. The ISS is where we master new environments, learn self-sufficiency, and prepare for the final frontier. We see astronauts harvesting chile peppers, 3D-printing tools from recycled plastic, and achieving 98% water recovery—a crucial metric for long-duration travel. It is presented as a logical, sequential, and successful research program. Step one, step two, step three.

The data points are impressive in isolation. Over 50 species of plants grown. The first metal part 3D-printed in orbit. Laser communications tested. Each is a press release, a success story, a justification for the station’s continued existence (and its estimated $100 billion-plus lifetime cost). The ISS, in this telling, is a springboard. It is the solid ground from which we will leap to other worlds.

But a complete data set requires looking at not just the successes, but the anomalies. And this is the part of the analysis that I find genuinely troubling. The story of the ISS is not one of serene, linear progress. It’s a story of constant, high-stakes crisis management.

The Anomaly Log

The public relations narrative omits the operational volatility. It smooths over the incidents that suggest the entire enterprise is far more fragile than we are led to believe. Let’s examine the outlier events, which seem to occur with alarming regularity.

In July 2021, Russia’s newly arrived Nauka module was supposed to be a triumphant upgrade. Instead, hours after docking, a software glitch caused its thrusters to fire uncontrollably. The entire space station, a multi-hundred-ton structure larger than a football field, began to spin. It rotated about 540 degrees, one and a half full turns, before flight controllers on two continents, firing counter-thrusters on other modules, could wrestle it back under control. The incident was resolved, but the underlying vulnerability is staggering. A single software error nearly sent the world’s most expensive piece of hardware into an unrecoverable tumble.

Then there are the leaks. Air is the most precious, non-renewable resource in a closed system. In August 2018, flight controllers detected a slow drop in cabin pressure while the crew slept. The source was eventually traced to a 2-millimeter hole in a docked Russian Soyuz spacecraft. A pinprick. The crew patched it with epoxy and gauze, but the cause remains a subject of debate: a manufacturing flaw, a micrometeorite, or something else. Four years later, in December 2022, another Soyuz capsule, MS-22, began venting its coolant into space. The suspected culprit this time was a micrometeorite impact that punched a tiny 0.8-millimeter hole in an external radiator. The immediate consequence was that the capsule, intended as a lifeboat for three crew members, was rendered unsafe for re-entry. The astronauts were effectively stranded in orbit without a guaranteed ride home until a replacement could be launched months later.

My analysis of risk models for complex systems suggests that the frequency and severity of these incidents are significant. They are not minor hiccups; they are systemic warnings. A routine spacewalk in July 2013 became a near-drowning in the vacuum of space when Italian astronaut Luca Parmitano’s helmet began filling with water from a malfunctioning cooling system. He was blinded and disoriented, making his way back to the airlock by feel while his colleagues guided him verbally. This wasn't a structural failure of the station, but a failure of a single, critical piece of life-support equipment. It demonstrates that the margin for error is functionally zero.

This pattern of risk extends to the very process of getting there. The dramatic launch abort of the Soyuz MS-10 in 2018, which subjected its crew to a harrowing ballistic descent at roughly 7 g, is often cited as proof of the system's robust safety features. And it is. But it is also proof that the fundamental process of reaching low Earth orbit remains a razor’s-edge gamble.

The official reports on these events always, quite correctly, highlight the ingenuity and calm professionalism of the astronauts and ground controllers who avert disaster. But this focus on successful mitigation obscures the more important question: why are these near-catastrophic failures happening so often? The narrative of a stable research platform paving the way to Mars is difficult to reconcile with the reality of an aging outpost that has nearly spun out of control, repeatedly sprung leaks, and almost drowned one of its occupants.

The station’s architecture itself presents a critical, and often unmentioned, dependency risk. While NASA has demonstrated that a SpaceX Dragon can reboost the station’s orbit, reducing reliance on Russian Progress vehicles, the core operational brain of the ISS resides in the Russian Zvezda module. The main command-and-control computers, supplied by the European Space Agency, are located there. The operational entanglement is absolute. This isn't a partnership of convenience; it is a structural single point of failure that is subject not only to technical decay but also to geopolitical turbulence.

When you factor in the station’s planned deorbit in 2030, the entire "springboard to Mars" narrative comes under pressure. The ISS will be at the bottom of the Pacific Ocean long before any human sets foot on Martian soil. Its function is not to directly enable that mission, but to provide data. And the most valuable data it may be providing is not on growing vegetables, but on how to manage a fantastically complex, interdependent, and aging asset at the absolute edge of technological and human endurance.

The International Space Station is an extraordinary achievement, but we must be precise about what kind of achievement it is. It is not the serene laboratory of the future we see in promotional videos. It is a high-orbit, high-stakes exercise in legacy systems management. Its greatest ongoing experiment is its own survival. The primary lesson the ISS offers for future missions to the Moon and Mars may not be how to build a new world, but a stark, multi-billion-dollar case study in just how hard it is to keep an old one from falling apart.
